Sat 22 Sep 2012

Unnatural Transformations and Quantifiers

Posted by Dan Doel under Category Theory, Haskell, Kan Extensions, Mathematics, Monads

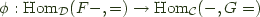

Recently, a fellow in category land discovered a fact that we in Haskell land have actually known for a while (in addition to things most of us probably don't). Specifically, given two categories $\mathcal{C}$ and $\mathcal{D}$, a functor $G : \mathcal{C} \to \mathcal{D}$, and provided some conditions in $\mathcal{D}$ hold, there exists a monad $T^G$, the codensity monad of $G$.

In category theory, the codensity monad is given by the rather frightening expression:

$$ T^G(a) = \int_r \left[\mathcal{D}(a, Gr), Gr\right] $$
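In Haskell land this end has a familiar face. As a minimal sketch, assuming we specialize to $\mathcal{C} = \mathcal{D} =$ Hask (so $G$ is just a type constructor `g`), the end over $r$ becomes a rank-2 `forall`, and both the hom-set $\mathcal{D}(a, Gr)$ and the power $[-, Gr]$ become ordinary function types, giving `forall r. (a -> g r) -> g r`. The names below are chosen for illustration; they resemble `Control.Monad.Codensity` from the `kan-extensions` package, but the block does not depend on it.

```haskell
{-# LANGUAGE RankNTypes #-}
module Main where

-- T^G(a) = \int_r [D(a, G r), G r], read in Hask:
-- the end over r is a 'forall', and both D(a, G r) and the power
-- [-, G r] are function types.
newtype Codensity g a = Codensity
  { runCodensity :: forall r. (a -> g r) -> g r }

-- None of these instances place any constraint on g.
instance Functor (Codensity g) where
  fmap f (Codensity m) = Codensity (\k -> m (k . f))

instance Applicative (Codensity g) where
  pure x = Codensity (\k -> k x)
  Codensity mf <*> Codensity mx = Codensity (\k -> mf (\f -> mx (k . f)))

instance Monad (Codensity g) where
  Codensity m >>= f = Codensity (\k -> m (\a -> runCodensity (f a) k))

-- Hypothetical helper: embed a computation of an existing monad.
liftCodensity :: Monad m => m a -> Codensity m a
liftCodensity ma = Codensity (ma >>=)

-- Hypothetical helper: run back down by using 'pure' as the final continuation.
lowerCodensity :: Applicative g => Codensity g a -> g a
lowerCodensity (Codensity m) = m pure

main :: IO ()
main = print (lowerCodensity sums)
  where
    -- Nondeterministic sums, built in Codensity [] and lowered to a list.
    sums = do
      x <- liftCodensity [1, 2 :: Int]
      y <- liftCodensity [10, 20]
      pure (x + y)
```

Note that the `Monad` instance needs no structure on `g` at all; only lifting in (`Monad m`) and lowering out (`Applicative g`) ask anything of it.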

Given functors $F : \mathcal{D} \to \mathcal{C}$ and $G : \mathcal{C} \to \mathcal{D}$ and a natural isomorphism:

$$ \mathcal{C}(Fa, b) \cong \mathcal{D}(a, Gb) $$

we call $F$ the left adjoint functor, $G$ the right adjoint functor, and $(F, G)$ an adjoint pair, and we write this relationship as $F \dashv G$.
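For a concrete instance in Haskell land, here is a minimal sketch assuming both categories are Hask: pairing with a fixed environment `e` is left adjoint to functions out of `e`, that is $F a = (e, a)$ and $G b = e \to b$, and the natural isomorphism $\mathcal{C}(Fa, b) \cong \mathcal{D}(a, Gb)$ is just (flipped) currying. The names `leftAdjunct`, `rightAdjunct`, `unit` and `counit` are illustrative; they mirror, but do not import, `Data.Functor.Adjunction` from the `adjunctions` package.

```haskell
module Main where

-- F a = (e, a) is left adjoint to G b = e -> b.
-- The two directions of the isomorphism C(F a, b) ~ D(a, G b):
leftAdjunct :: ((e, a) -> b) -> (a -> e -> b)
leftAdjunct h a e = h (e, a)

rightAdjunct :: (a -> e -> b) -> ((e, a) -> b)
rightAdjunct k (e, a) = k a e

-- The unit and counit fall out by feeding 'id' through each direction.
unit :: a -> (e -> (e, a))
unit = leftAdjunct id

counit :: (e, e -> b) -> b
counit = rightAdjunct id

main :: IO ()
main = do
  let h (e, a) = e * 10 + a :: Int
  print (leftAdjunct h 3 4)                    -- h (4, 3), i.e. 43
  print (rightAdjunct (leftAdjunct h) (4, 3))  -- round trip, also 43
  print (counit (5, (+ 1) :: Int -> Int))      -- applies (+ 1) to 5, i.e. 6
```

Because `leftAdjunct` and `rightAdjunct` are mutually inverse, the round trip in `main` returns the original function's result unchanged.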